Paper Reading Notes on ICLR-2022 Conference

I was fortunate to find some time to virtually attend some of the poster and oral sessions at ICLR 2022. I’d like to share some of the research works that I read at the conference. I hope you find this information helpful and that it sparks your interest in reading more ICLR 2022 papers. I broadly divide these papers into three categories: training strategy, symbolic learning, and interpretability. Finally, I also list some papers that I’m interested in but whose sessions I did not find time to attend.

Table of Contents

Training Strategy

- TRANS-ENCODER: Unsupervised sentence-pair modeling through self- and mutual-distillations

- Weighted Training for Cross-Task Learning

- Finetuned Language Models are Zero-Shot Learners

- Towards Understanding the Data Dependency of Mixup-style Training

- The Rich Get Richer: Disparate Impact of Semi-Supervised Learning

- AdaAug: Learning Class- and Instance-adaptive Data Augmentation Policies

Symbolic Learning and Structured Prediction

- Safe Neurosymbolic Learning with Differentiable Symbolic Execution

- Constraining Linear-chain CRFs to Regular Languages

Interpretability

Training Strategy

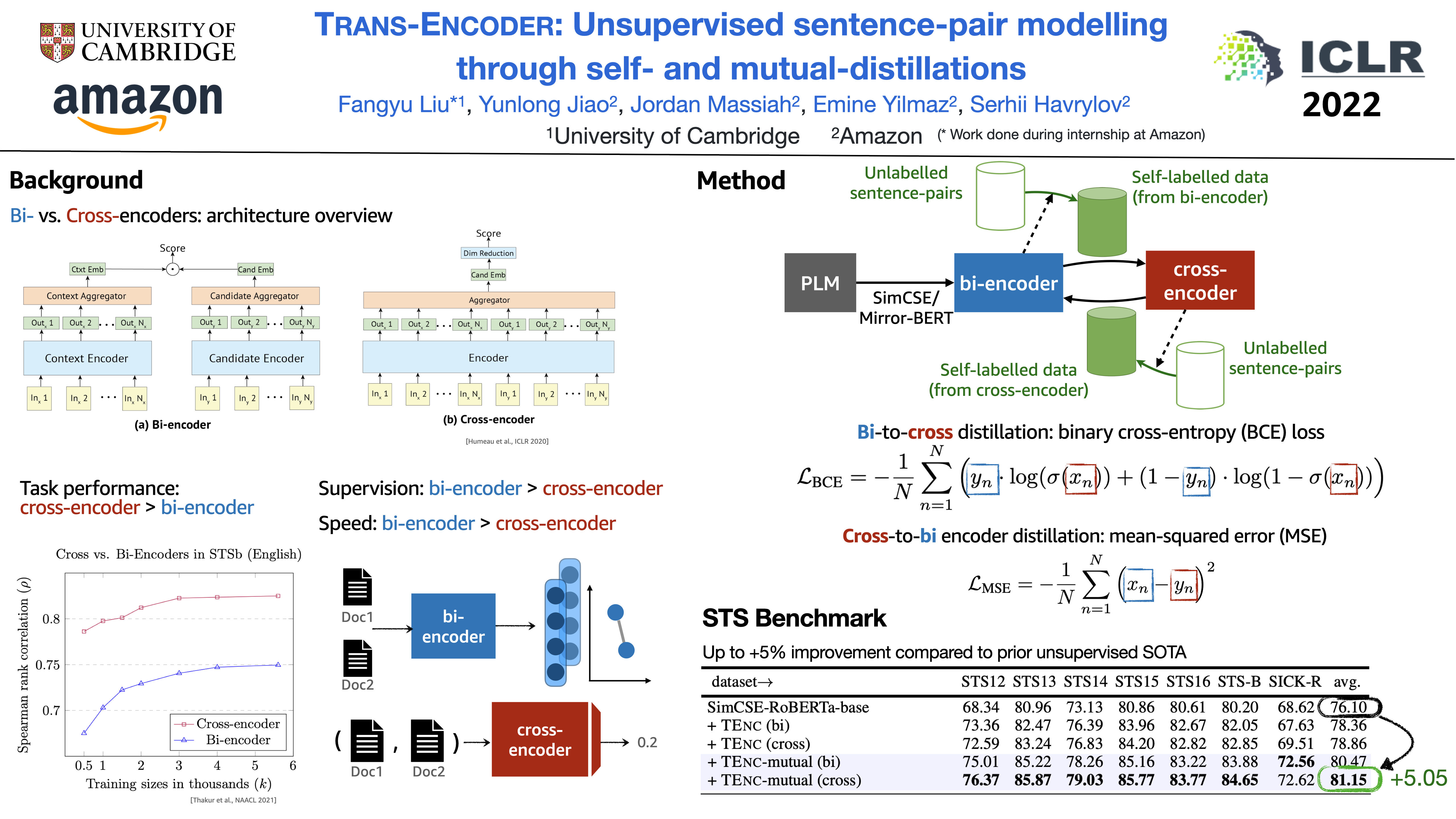

TRANS-ENCODER

The first research work I read is “TRANS-ENCODER: Unsupervised sentence-pair modeling through self- and mutual-distillations” by Fangyu Liu from the University of Cambridge.

They proposed a distillation training mechanism to incorporate both bi-encoder and cross-encoder for unsupervised sentence representation learning.

Given the different advantages of bi-encoders and cross-encoders, they perform iterative distillation between the two. This method improves the performance of unsupervised STS by up to 5 points.

Some insights after talking to the authors:

- The number of unlabeled pairs also matters, as the performance does not always increase with more pairs.

- Such a framework can be generalized to distillation among multiple model architectures (\(\geq 2\)).

- Another paper by Google (called “Model Soups”) reports that averaging the parameters of models fine-tuned with different hyperparameters can further improve performance. The author also mentioned to me that they tried something similar in this work and saw some improvements as well.
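The parameter-averaging idea from the last bullet can be sketched in a few lines. This is only a toy illustration: the checkpoint dictionaries and the `average_checkpoints` helper are hypothetical names of mine, and real checkpoints would hold tensors rather than short Python lists.

```python
def average_checkpoints(checkpoints):
    """Uniformly average parameter vectors across checkpoints with identical keys."""
    n = len(checkpoints)
    return {
        name: [sum(values) / n for values in zip(*(c[name] for c in checkpoints))]
        for name in checkpoints[0]
    }

# Two hypothetical checkpoints of the same architecture,
# trained with different hyperparameters.
ckpt_a = {"w": [1.0, 2.0], "b": [0.0]}
ckpt_b = {"w": [3.0, 4.0], "b": [1.0]}

soup = average_checkpoints([ckpt_a, ckpt_b])
print(soup)
```

Averaging only makes sense when all checkpoints share the same architecture and parameter names, which is why the sketch iterates over the keys of the first checkpoint.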

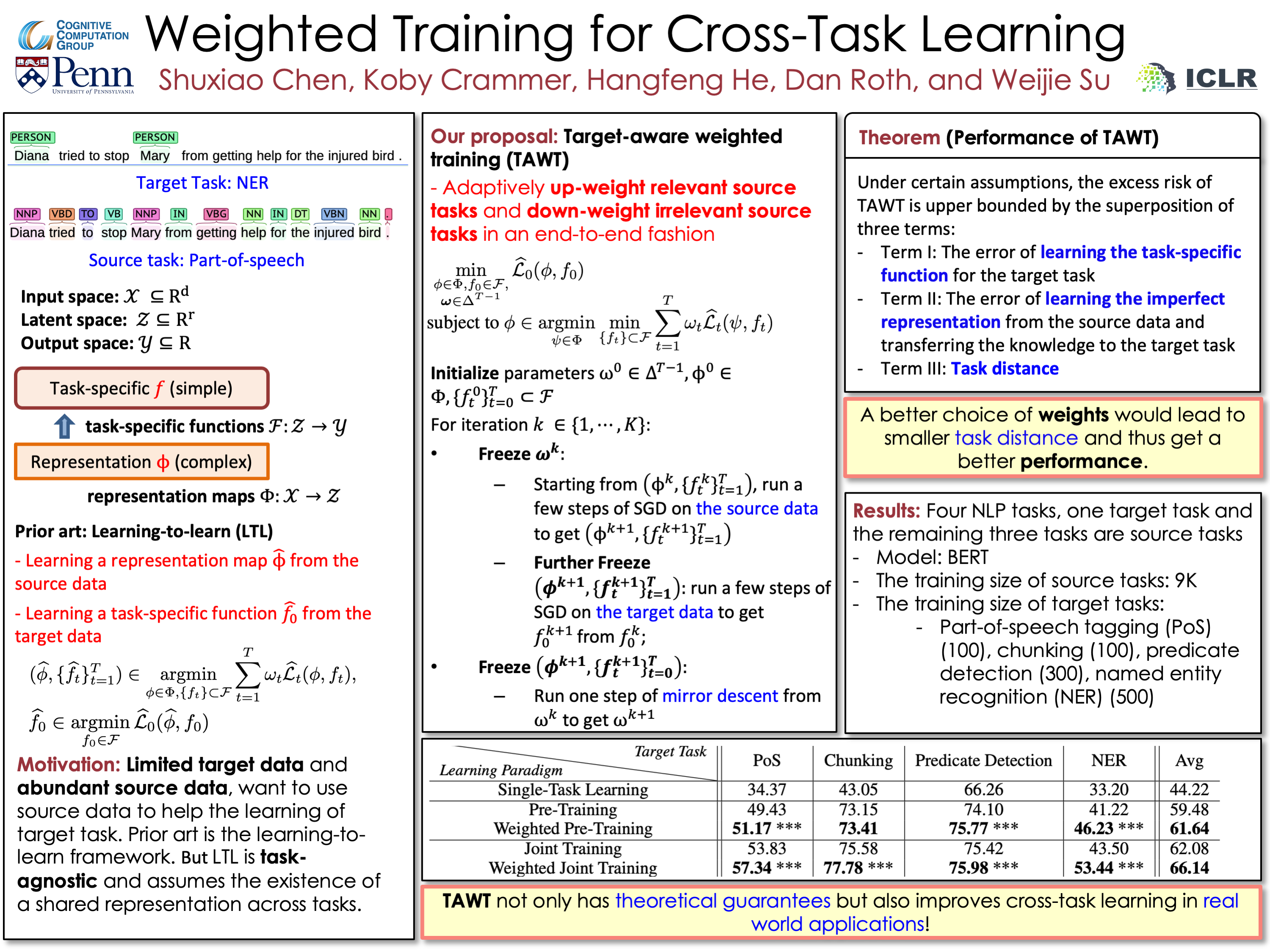

Weighted Training for Cross-Task Learning

This work is by Shuxiao Chen from the University of Pennsylvania. They proposed a weighted training framework for the scenario where we have limited data for the target task but abundant data for the source tasks. In this poster, their example target task is named entity recognition (NER), while the source task can be part-of-speech (POS) tagging.

The training mechanism is pretty intuitive:

Adaptively up-weight relevant source tasks and down-weight irrelevant source tasks.
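To make the up-/down-weighting concrete, here is a minimal sketch of how I picture it. Everything here is an assumption for illustration: the distance values, the multiplicative update rule, and the function names are mine, not the estimator or update used in the paper.

```python
import math

def update_task_weights(weights, distances, step_size=1.0):
    """Multiplicative update: source tasks closer to the target gain weight."""
    scaled = [w * math.exp(-step_size * d) for w, d in zip(weights, distances)]
    total = sum(scaled)
    return [s / total for s in scaled]  # renormalize so the weights sum to 1

def weighted_loss(weights, task_losses):
    """Training objective: weighted sum of the per-source-task losses."""
    return sum(w * loss for w, loss in zip(weights, task_losses))

# Hypothetical setup: two source tasks, the first one close to the target task.
weights = [0.5, 0.5]
distances = [0.1, 2.0]    # made-up task distances (smaller = more relevant)
task_losses = [0.8, 1.5]  # made-up current losses on each source task

weights = update_task_weights(weights, distances)
print(weights, weighted_loss(weights, task_losses))
```

After one update the relevant task dominates the objective, so the shared model is trained mostly on data that helps the target task.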

They have a theoretical proof (Theorem 3.1) in their paper showing that this training scheme converges and that the error is bounded by certain variables.

(The mathematical proof is pretty long, and readers can refer to the paper if interested.)

One of these variables is the task distance, which measures how close the target task and the source task are.

Of course, if the two tasks are far from each other, the improvements would not be as significant as those in the results.

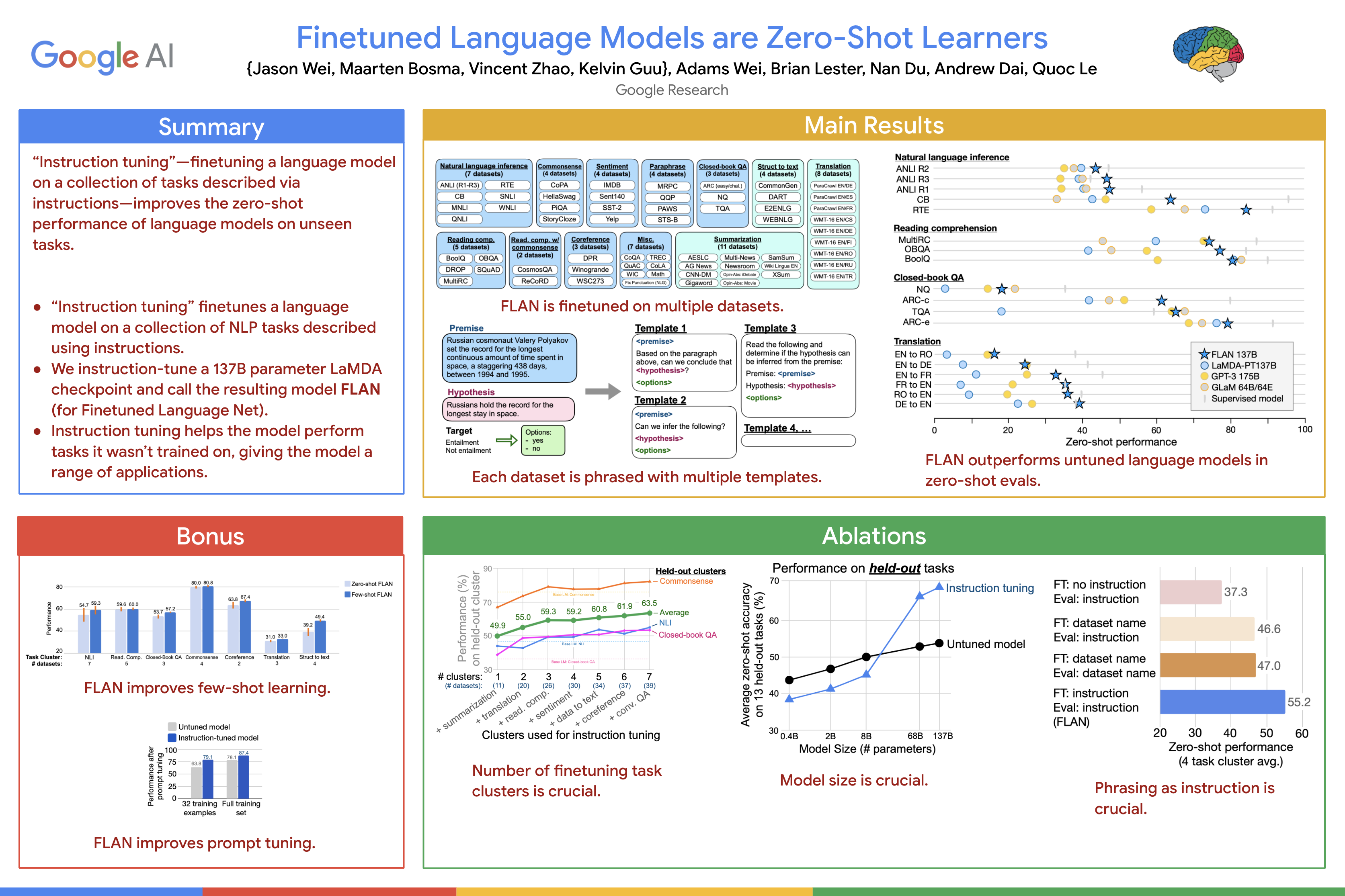

Finetuned Language Models are Zero-Shot Learners

I just attended this talk by Jason Wei. As shown in the summary, researchers from Google show that we can obtain better zero-shot performance by finetuning (large) language models on other tasks via instruction tuning. Basically, we design instruction templates, as shown in the center of the poster, and finetune the model on the templated examples. In my opinion, the instructions are pretty similar to prompts in terms of implementation.
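Here is roughly how I imagine the templating step in code. The two templates and the `build_instruction_examples` helper are made up by me for illustration; they are not the templates from the paper.

```python
# Hypothetical instruction templates for a natural language inference example.
TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis? Options: {options}",
    "Read the following and decide whether the hypothesis can be inferred "
    "from the premise.\nPremise: {premise}\nHypothesis: {hypothesis}\n"
    "Options: {options}",
]

def build_instruction_examples(example, templates=TEMPLATES):
    """Render one labeled example into several (input, target) finetuning pairs."""
    options = ", ".join(example["options"])
    return [
        {
            "input": t.format(
                premise=example["premise"],
                hypothesis=example["hypothesis"],
                options=options,
            ),
            "target": example["label"],
        }
        for t in templates
    ]

example = {
    "premise": "A dog is running in the park.",
    "hypothesis": "An animal is outside.",
    "options": ["yes", "no"],
    "label": "yes",
}
pairs = build_instruction_examples(example)
print(pairs[0]["input"])
```

Using several templates per task means the model sees the same underlying example phrased in different ways, which is the part that looks a lot like prompt design to me.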

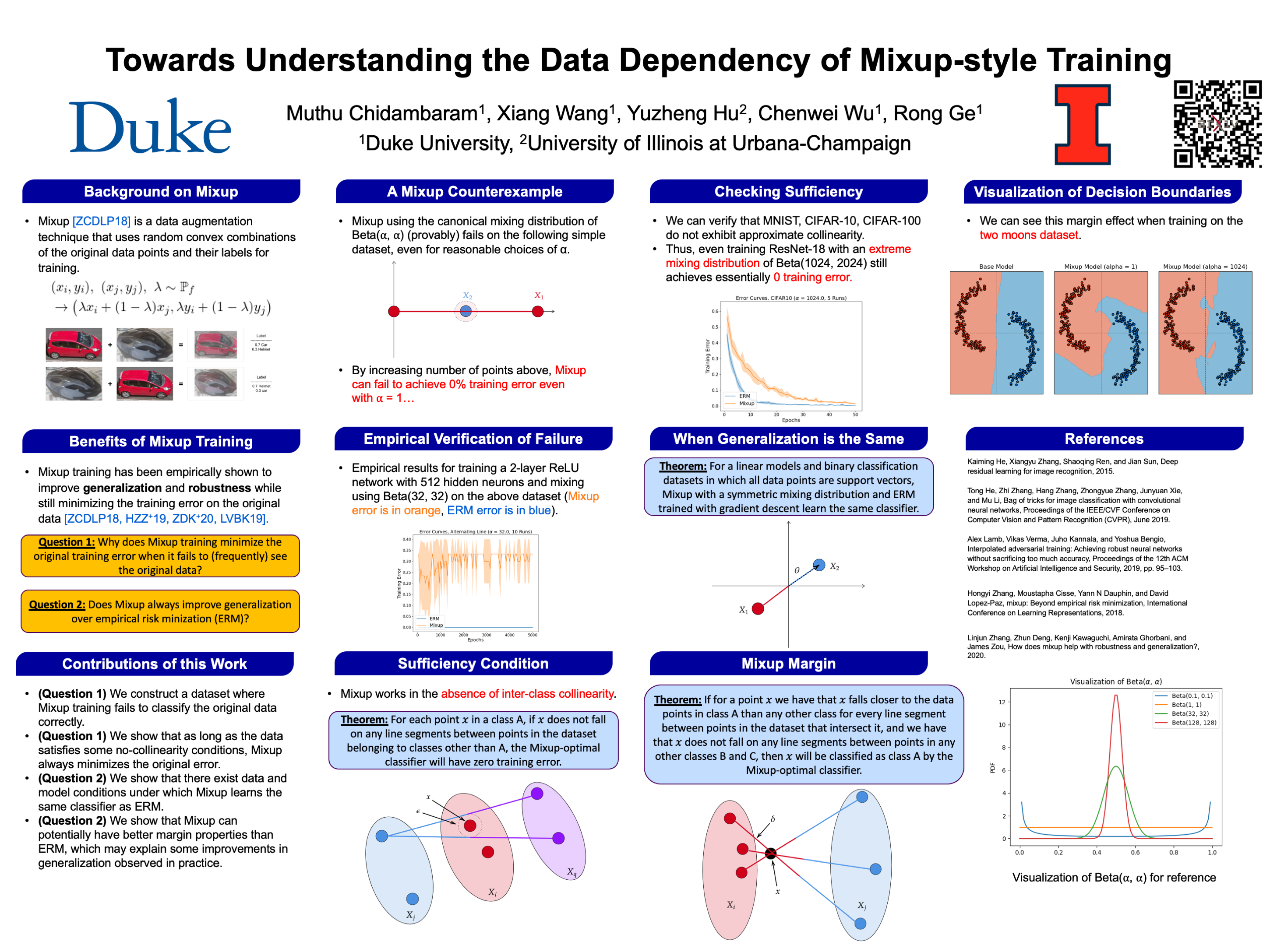

Towards Understanding the Data Dependency of Mixup-style Training

This work by Muthu investigates vanilla mixup-style training and gives some insights on when mixup fails and when it succeeds.

From the background in this poster, we know that during training we can mix the labels of two examples to obtain the label for their mixed-up representation.

However, there is a problem if such a representation actually has a label different from both of these labels, which they call inter-class collinearity.

Interestingly, they also show that we can learn a better boundary using the mixup-style training in the visualization (with \(\alpha =1\)).

The hyperparameter \(\alpha\) controls the mixing density in our training data. A higher \(\alpha\) means a higher concentration on mixup. This is also why the last plot has a somewhat sharper boundary than the plot with \(\alpha =1\).
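For reference, the standard mixup recipe samples a mixing coefficient \(\lambda \sim \mathrm{Beta}(\alpha, \alpha)\) and interpolates both inputs and labels. The sketch below follows that standard recipe; the helper names and the toy one-hot data are mine, and I have not checked it against the exact setup in this paper.

```python
import random

def mixup(x1, y1, x2, y2, alpha=1.0, rng=random):
    """Mix two examples and their one-hot labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam

def lambda_spread(alpha, n=2000, seed=0):
    """Average distance of lam from 0.5; smaller means more 'half-half' mixing."""
    rng = random.Random(seed)
    return sum(abs(rng.betavariate(alpha, alpha) - 0.5) for _ in range(n)) / n

# Toy data: two 2-D points from two different classes.
x, y, lam = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0],
                  alpha=1.0, rng=random.Random(0))
print(x, y, lam)
print(lambda_spread(0.2), lambda_spread(8.0))
```

With a small \(\alpha\), \(\lambda\) tends to sit near 0 or 1 (almost no mixing), while a large \(\alpha\) pushes \(\lambda\) toward 0.5, which matches the "higher concentration on mixup" reading above.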

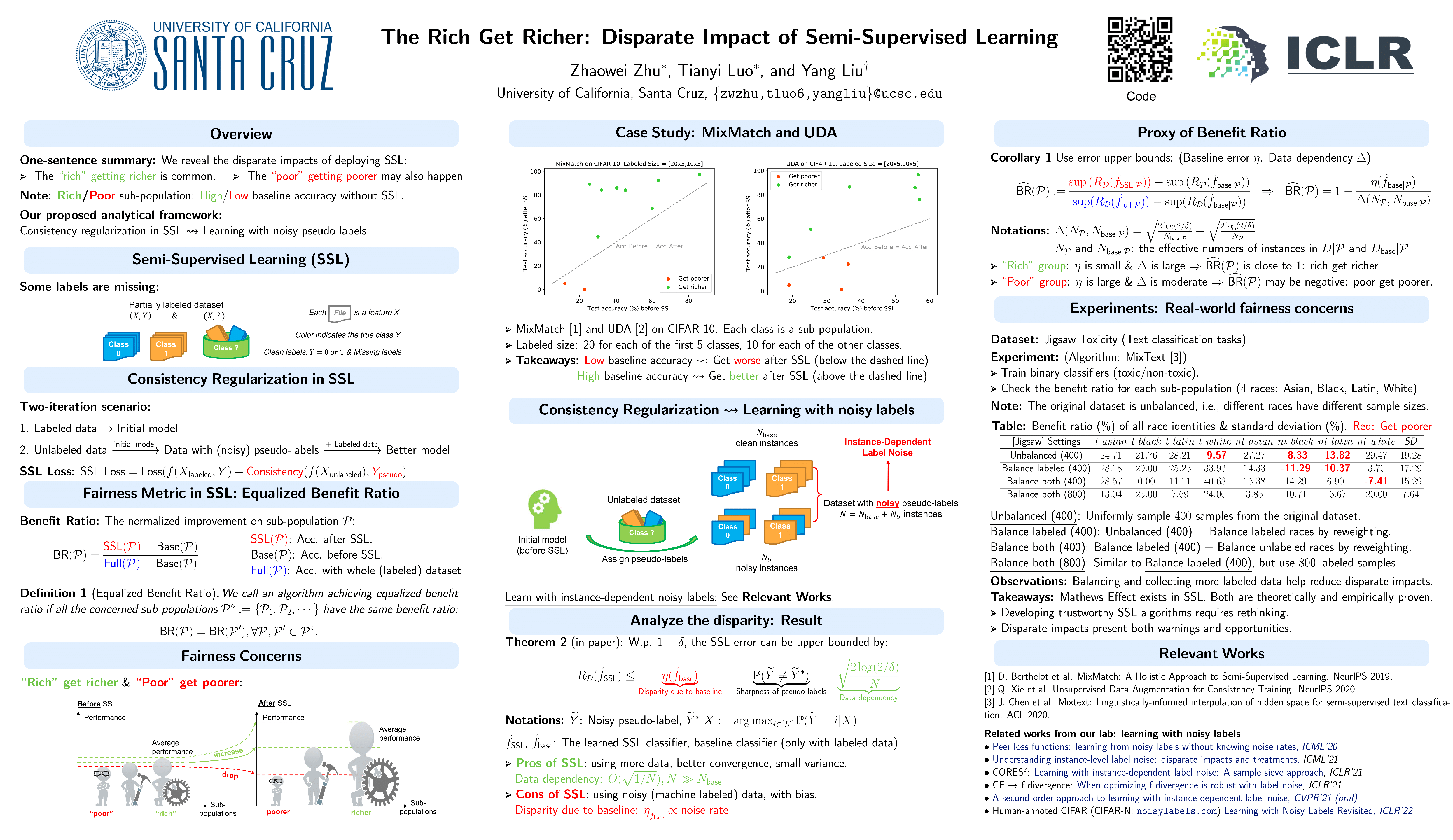

The Rich Get Richer: Disparate Impact of Semi-Supervised Learning

This work also shows the disparate impacts of deploying semi-supervised learning (SSL).

“Rich get richer and poor get poorer” means that a model would achieve lower accuracy after SSL if the model originally gives us “low” accuracy.

In other words, we would get higher accuracy after SSL if the original accuracy is also “high”.

They quantify the comparison with a benefit ratio, as shown in the poster.

They adopt a simple SSL framework and prove that the error is bounded by the disparity of the baseline, which strongly relates to the noise rate of the unlabeled data.
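As far as I remember, the benefit ratio compares the accuracy gain from SSL against the gain we would get in the ideal case where all the data is labeled. Please treat the formula below as my recollection rather than the paper's exact definition, and note that the accuracy numbers are entirely made up.

```python
def benefit_ratio(acc_baseline, acc_semi, acc_ideal):
    """Fraction of the ideal (fully supervised) improvement that SSL achieves.

    Assumed form: (acc_semi - acc_baseline) / (acc_ideal - acc_baseline).
    """
    return (acc_semi - acc_baseline) / (acc_ideal - acc_baseline)

# Hypothetical sub-populations: a "rich" one with high baseline accuracy
# and a "poor" one with low baseline accuracy.
rich = benefit_ratio(acc_baseline=0.90, acc_semi=0.94, acc_ideal=0.95)
poor = benefit_ratio(acc_baseline=0.60, acc_semi=0.55, acc_ideal=0.85)
print(rich, poor)
```

Under this reading, a ratio close to 1 means the group gets almost the full benefit of SSL, and a negative ratio means SSL actually hurts that group.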

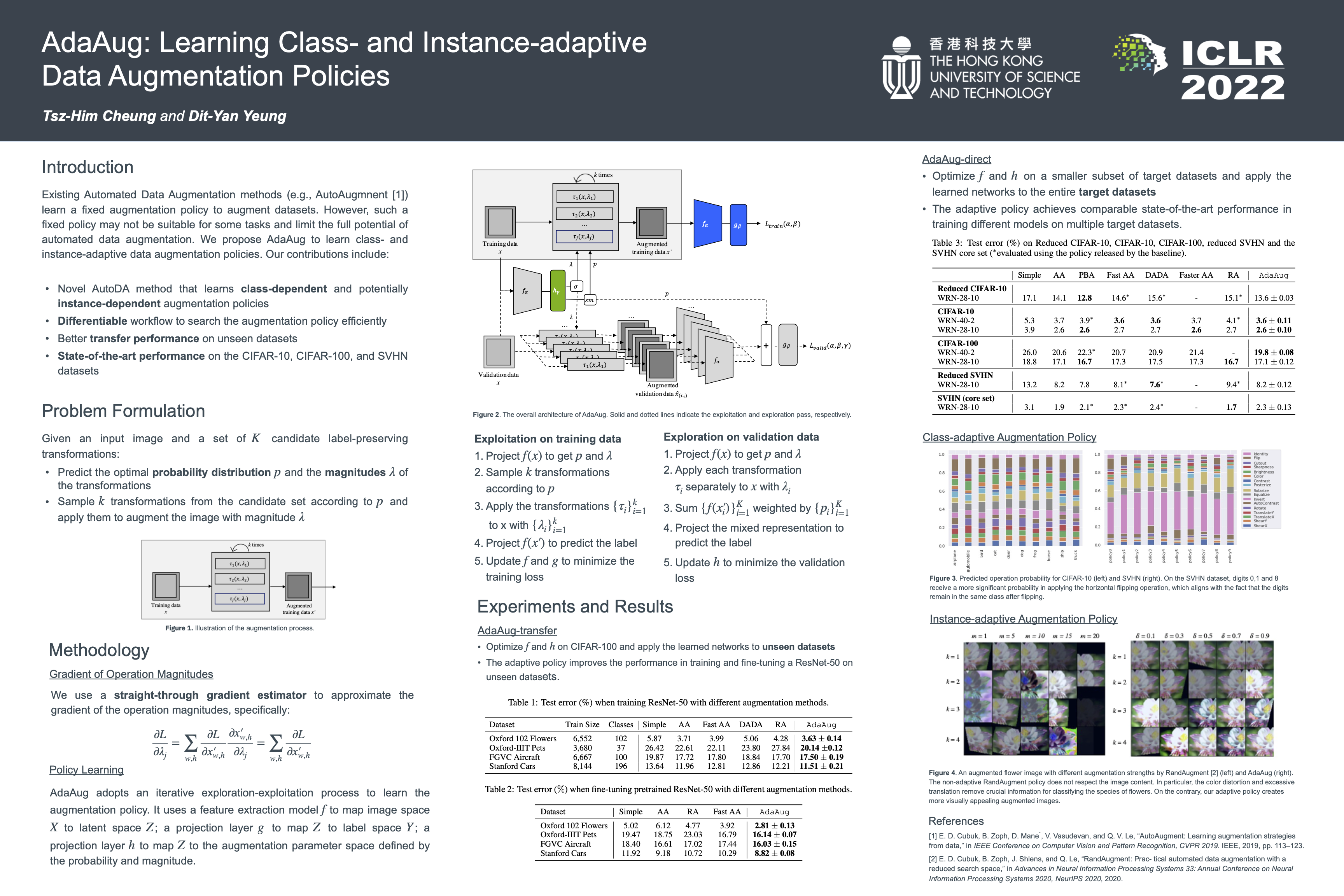

AdaAug: Learning Class- and Instance-adaptive Data Augmentation Policies

This work on data augmentation is by Tsz-Him Cheung from HKUST. I like the key idea that they train a parameterized network on the validation dataset to learn the hyperparameters. Specifically, in Figure 2, the network parameterized by \(h_\gamma\) is used to predict the hyperparameters for selecting the augmentation strategies.

- They train the model on the training data as shown in the upper architecture, while freezing the parameters of \(h_\gamma\).

- They train the parameters of \(h_\gamma\) on the validation data, while freezing the parameters of the blue modules.

In this way, they can select the best augmentation strategy in a trainable way. Of course, we might wonder whether the amount of validation data could be a potential issue.

Symbolic Learning and Structured Prediction

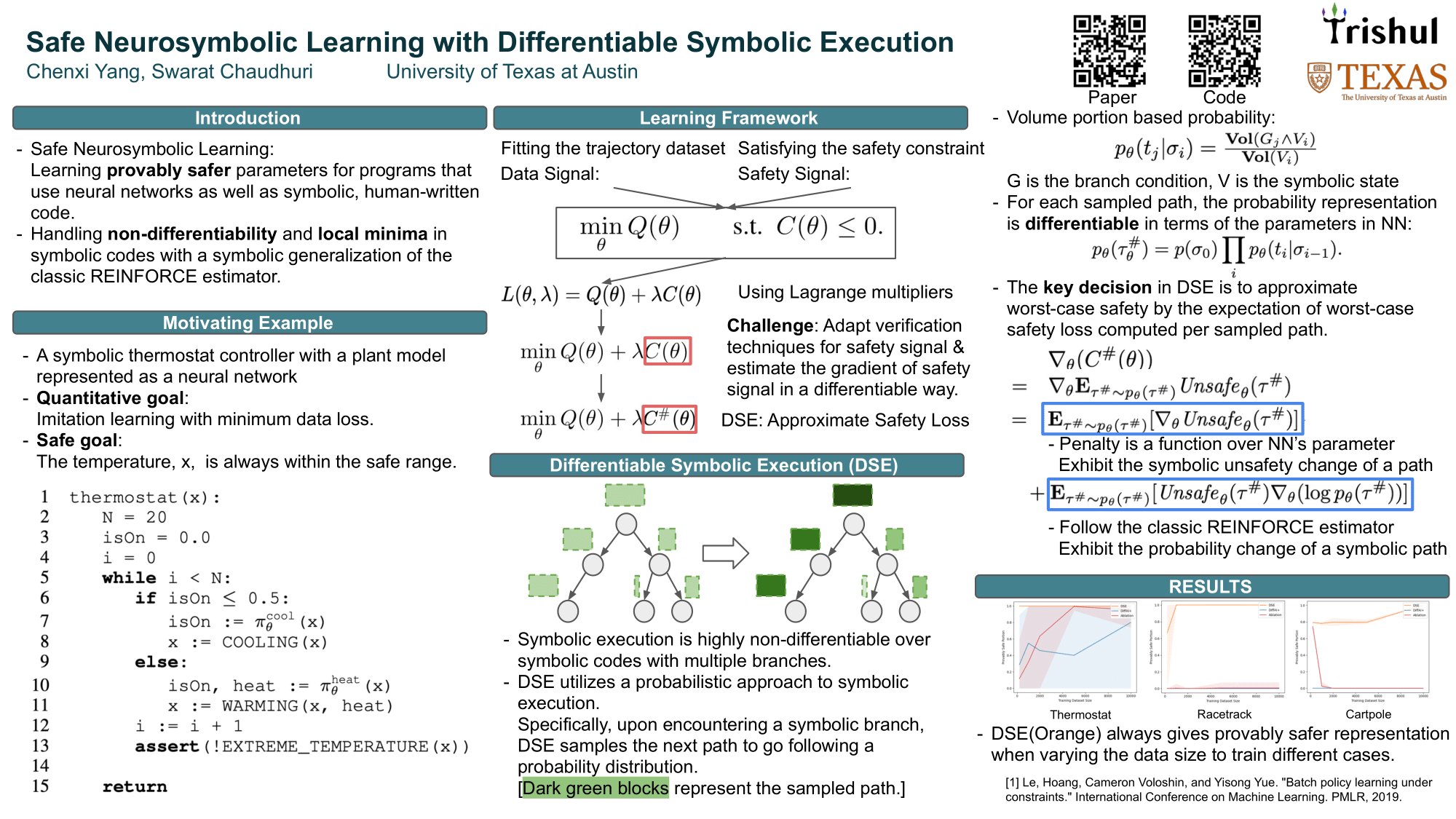

Safe Neurosymbolic Learning with Differentiable Symbolic Execution

Unlike symbolic reasoning in NLP, this work is about learning neural networks embedded in an executable program. More specifically, such a program is called a “symbolic transition system” (Manna & Pnueli, 2012). This work is by Chenxi Yang from the University of Texas at Austin.

Problem: learn the neural network parameters embedded in a program and minimize the error (\(\min Q(\theta)\)) to fit the training dataset.

Method: lay out the symbolic execution graph and sample trajectories over this graph. The sampling process also allows us to train the whole system in a differentiable way, even though the system has non-differentiable operations.

Insight: As we can see in the final results, this approach is 100% safe on the thermostat dataset even without any training data, because we can sample directly from the execution graph rather than relying on the training data.

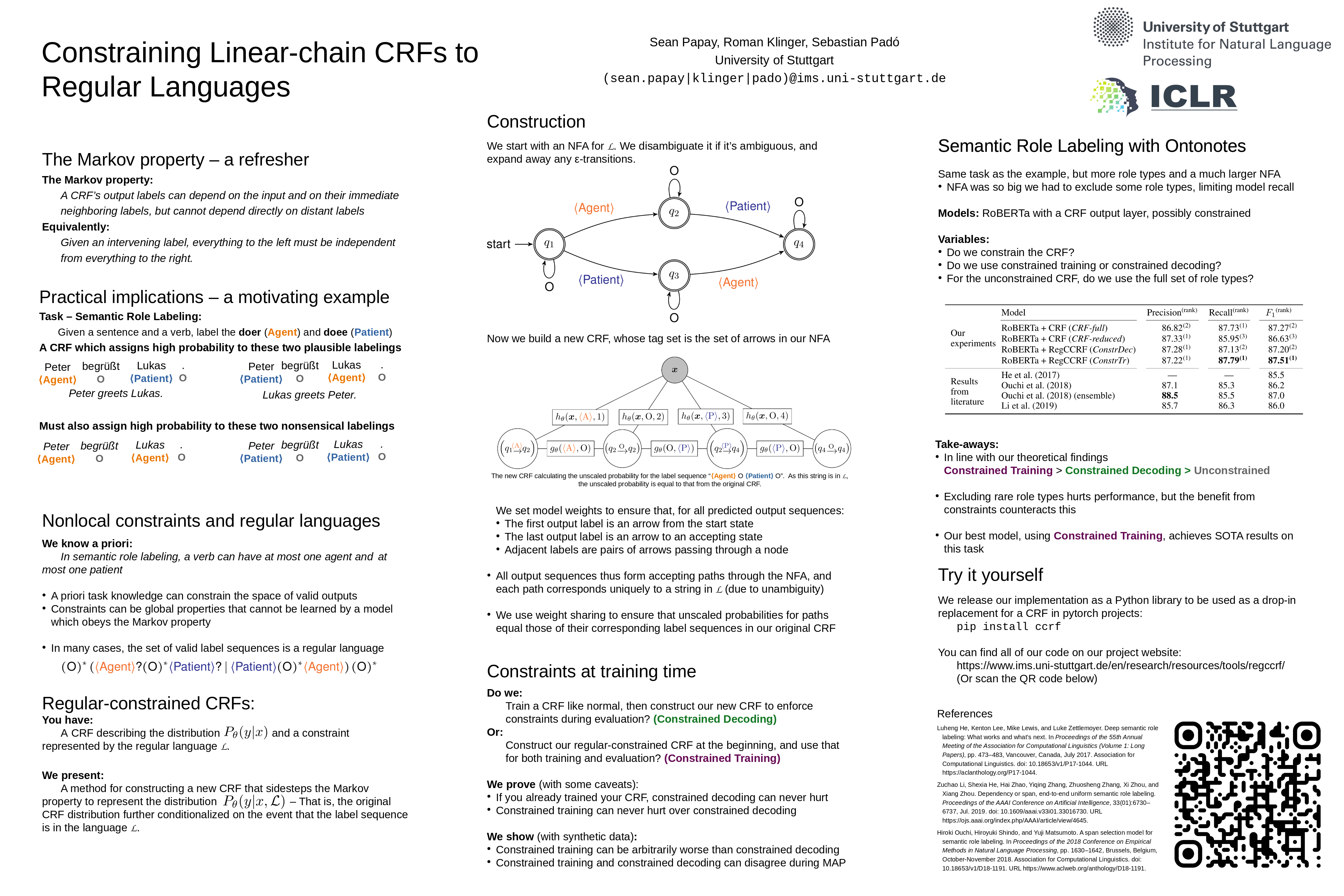

Constraining Linear-chain CRFs to Regular Languages

I’m always interested in structured prediction, so the title drew me to have a chat with the author, Sean Papay from the University of Stuttgart. They start with a finite-state automaton and build the CRF graphical model according to that “language”. To be more specific, there are certain constraints, for example:

- The state \(q_2\) cannot go to state \(q_4\) with an action of Patient.

IMO, it is also pretty similar to a constrained CRF, where we design certain constraints using our prior knowledge. But I didn’t fully read the paper, so I could be misunderstanding some of the key content here.
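To show what I mean by a constrained CRF, here is a plain Viterbi decoder where forbidden transitions get a score of negative infinity. This is the generic constrained-decoding trick, not the construction from the paper, and the labels, scores, and the single "O cannot go to I" constraint are hypothetical.

```python
NEG_INF = float("-inf")

def viterbi(emissions, transitions):
    """emissions[t][j]: score of label j at step t; transitions[i][j]: score of i -> j."""
    n_labels = len(emissions[0])
    score = list(emissions[0])
    backptr = []
    for emit in emissions[1:]:
        new_score, ptr = [], []
        for j in range(n_labels):
            # Best previous label for landing on label j at this step.
            best_i = max(range(n_labels), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emit[j])
            ptr.append(best_i)
        score = new_score
        backptr.append(ptr)
    # Follow the back-pointers from the best final label.
    path = [max(range(n_labels), key=lambda j: score[j])]
    for ptr in reversed(backptr):
        path.append(ptr[path[-1]])
    return path[::-1]

LABELS = ["O", "B", "I"]
emissions = [[2.0, 0.0, 0.0], [0.0, 1.0, 2.0], [2.0, 0.0, 0.0]]

free = [[0.0] * 3 for _ in range(3)]
constrained = [row[:] for row in free]
constrained[0][2] = NEG_INF  # prior knowledge: "I" may not directly follow "O"

free_path = [LABELS[i] for i in viterbi(emissions, free)]
constrained_path = [LABELS[i] for i in viterbi(emissions, constrained)]
print(free_path, constrained_path)
```

Without the constraint the decoder happily outputs the invalid sequence O, I, O; with the masked transition it falls back to the best valid sequence instead.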

Interpretability

How Do Vision Transformers Work?

This paper presents some empirical findings through some pretty nice figures. I list some of their key findings here:

- Figure 1: ViT has a smoother loss landscape than ResNet because of the softmax.

- The learning trajectory of the parameters of ViT is also smooth compared to the one in ResNet.

- Multi-head self-attentions (MSAs) are low-pass filters, whereas convolutions are high-pass filters.

The original paper also compares more state-of-the-art architectures such as Swin Transformers. Reading the paper might be helpful if you are looking for a vision encoder that is suitable for a specific dataset.
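To remind myself what "low-pass" and "high-pass" mean, here is a toy 1-D illustration. It is not the paper's analysis: I simply compare an averaging kernel (non-negative weights summing to 1, like a row of softmax attention weights) with a difference kernel, and both kernels are my own choices.

```python
def circular_filter(signal, kernel):
    """y[i] = sum_k kernel[k] * signal[i - k], with wrap-around indexing."""
    n = len(signal)
    return [
        sum(w * signal[(i - k) % n] for k, w in enumerate(kernel))
        for i in range(n)
    ]

def amplitude(signal):
    return max(abs(v) for v in signal)

high_freq = [(-1.0) ** i for i in range(8)]  # fastest possible oscillation
constant = [1.0] * 8                          # zero-frequency signal

averaging = [1 / 3, 1 / 3, 1 / 3]  # weights sum to 1, like attention weights
difference = [1.0, -1.0]           # responds only to changes between neighbors

print(amplitude(circular_filter(high_freq, averaging)),
      amplitude(circular_filter(constant, averaging)))
print(amplitude(circular_filter(high_freq, difference)),
      amplitude(circular_filter(constant, difference)))
```

The averaging kernel keeps the constant signal and shrinks the oscillation (low-pass), while the difference kernel removes the constant signal and amplifies the oscillation (high-pass).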

Discovering Latent Concepts Learned in BERT

The last poster I read at the conference is about findings in BERT. They perform clustering on the word representations given by BERT/RoBERTa. The same word in different contexts might have different semantic meanings as well. Similar to some previous findings, they also show that the representations in the upper layers convey more semantic information, while the representations in the lower layers convey syntactic information. They manually annotate a dataset with 174 concept labels and a total of 1 million annotated instances. Though they did not run any downstream experiments, the research effort here is appreciated and could benefit our research community.

Others

Here is a list of papers that I had in mind while going through the above posters.

- Pyraformer: Low-Complexity Pyramidal Attention for Long-Range Time Series Modeling and Forecasting

- Large Language Models Can Be Strong Differentially Private Learners

  - This looks like a new technique to me: “Differentially Private (DP) learning”. As explained by a friend, Xiaosen Zheng, differential privacy is about learning a model that does not expose private information about its training data. For example, in a membership inference attack, a good attacker can tell whether a sample is from the training set. Differentially private learning tries to defend against such attacks. This paper shows that the hyperparameters are the reason why previous DP methods fail.

- Autoregressive Diffusion Model

- Self-Supervised Inference in State-Space Models

- Unsupervised Vision-Language Grammar Induction with Shared Structure Modeling

- A Fine-Tuning Approach to Belief State Modeling

- On the limitations of multimodal VAEs

- Differentiable DAG Sampling

- Knowledge Infused Decoding

- Self-supervised Learning is More Robust to Dataset Imbalance

- Enhancing Cross-lingual Transfer by Manifold Mixup

- How Do Vision Transformers Work?
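For the differentially private learning entry in the list above, the generic DP-SGD recipe is to clip each example's gradient, sum the clipped gradients, add Gaussian noise, and then average. The sketch below follows that generic recipe with made-up gradients; it is not the specific setup of the paper, and the function and parameter names are mine.

```python
import math
import random

def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD step: clip per-example gradients, sum, add noise, average, update."""
    summed = [0.0] * len(params)
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        scale = min(1.0, clip_norm / (norm + 1e-12))  # shrink only if norm > clip_norm
        for i, g in enumerate(grad):
            summed[i] += g * scale
    batch_size = len(per_example_grads)
    noisy_mean = [
        (s + rng.gauss(0.0, noise_multiplier * clip_norm)) / batch_size
        for s in summed
    ]
    return [p - lr * g for p, g in zip(params, noisy_mean)]

# Hypothetical per-example gradients: the first one is large and gets clipped.
grads = [[3.0, 4.0], [0.3, 0.4]]
no_noise = dp_sgd_step([0.0, 0.0], grads, lr=1.0, clip_norm=1.0,
                       noise_multiplier=0.0, rng=random.Random(0))
with_noise = dp_sgd_step([0.0, 0.0], grads, lr=1.0, clip_norm=1.0,
                         noise_multiplier=1.0, rng=random.Random(0))
print(no_noise, with_noise)
```

The clipping bounds how much any single training example can move the model, and the noise hides what is left of that influence, which is what makes membership inference harder. The clipping norm, noise multiplier, learning rate, and batch size are exactly the kind of hyperparameters the note above refers to.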